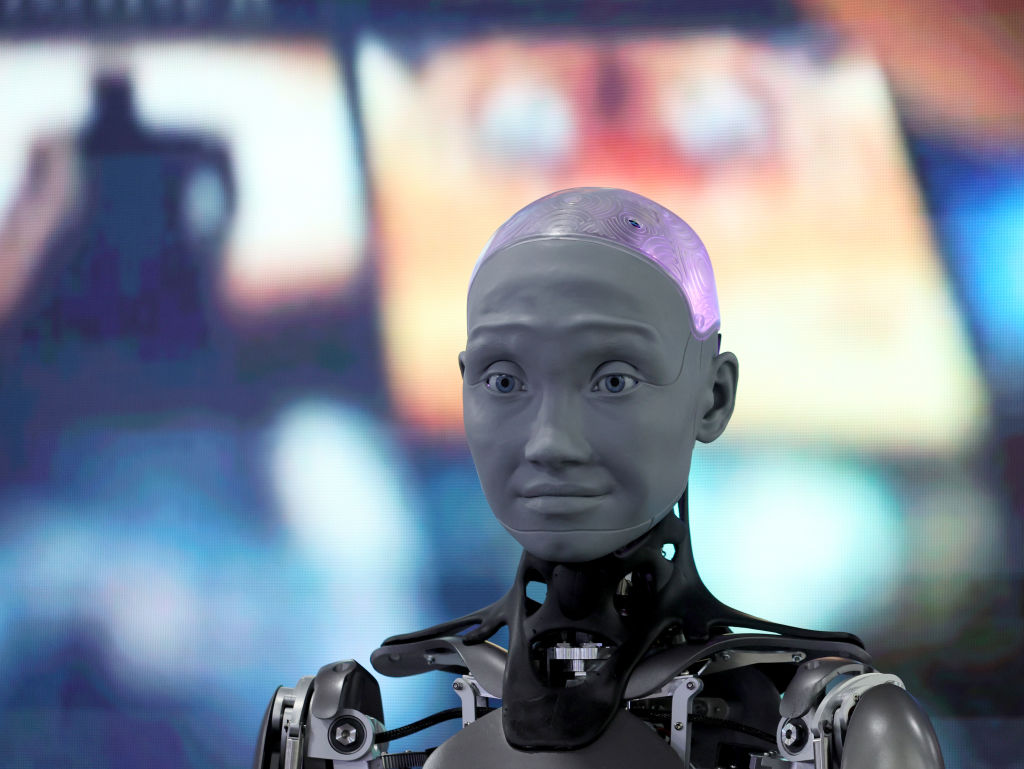

It seems like we can’t help getting attached to robots. It also seems like the people making the robots are counting on that.

In the 2015 video game Fallout 4, one of the factions with which the player can align him- or herself is “the Railroad,” an organization that helps seemingly sentient androids escape from the lab in which they’re created.

A friend of mine mocked me mercilessly for aligning myself with the “SJWs” of the Railroad and insisted that the androids — who spoke eloquently of their emotions, friendships, and aspirations — actually had the same moral worth as a toaster.

I laughed, but secretly still felt I’d made the right choice. Surely it was always better to err on the side of greater empathy.

A prime example of such empathy can be found in this 2016 New Yorker piece:

> [A] roboticist at the Los Alamos National Laboratory built an unlovable, centipede-like robot designed to clear land mines by crawling forward until all its legs were blown off. During a test run, in Arizona, an Army colonel ordered the exercise stopped, because, according to the Washington Post, he found the violence to the robot “inhumane.”

Imagine how guilty we’ll feel when robots have fully articulated human faces and the ability to simulate pain.

Then there’s this viral Tumblr post:

> OP: I was sitting at my desk just a few minutes ago, drawing, and a really loud crack of thunder went off–no power surges or anything, just thunder–and my roomba fled from its dock and started spinning in circles… I now have an active roomba sitting quietly on my lap
>
> Commenter: Humans will pack bond with anything

The reference to pack bonding is interesting. According to some scientists, two of humanity’s biggest evolutionary boons were empathy (one example being eyes that facilitate eye contact) and a predisposition to attribute events to some sort of agency (e.g., assuming the grass rustled because of a predator rather than the wind).

At the intersection of those two forces lies what I’ve taken to calling “empathy hacking.” The hackers convince us that some inanimate object deserves our empathy and, therefore, that we ought not resist it. The very traits that make us human are exploited to make us submit to our own dehumanization.

I’ll admit this sounds a bit paranoid, but bear with me.

When Bird scooters first became a thing, I hated them. I once had to stop my car three separate times to move scooters that had been left in the middle of the road at 50-foot intervals, presumably by some psychopath hoping to cause an accident. One day, I saw one blocking the entrance to my apartment’s courtyard and decided I’d had enough. I pushed it over. In response, it emitted a plaintive little wail, almost like a wounded bird. For a moment, I actually felt guilty.

Then I realized that was the point. Big tech can exploit workers, suppress information, make our cities less safe, toxify our political discourse, and destroy our mental health, but if you handle their products roughly, you’re a bad person. Maybe even a sociopath.

It’s like the moment in Dr. Strangelove when Colonel “Bat” Guano balks at shooting open a Coke machine, even though Group Captain Mandrake needs the change to place a call that will avert nuclear war. Only this time, the Coke machine has a face and begs for its life.

One of our moral and intellectual superiors over at NPR went so far as to suggest that treating female-voiced digital assistants like Siri and Alexa with anything less than total respect is sexist.

How far will corporate America take this? What tricks won’t they use to make us love the techno-utopia in which they long to ensconce us, and to make us feel like bad people if we question it?

With robots, the obvious answer is to power through the guilt, to actively desensitize ourselves, to smash our overlords’ machines no matter how cute they look. Wage the Butlerian Jihad without mercy.

When the elites use our fellow human beings to advance their agenda, things become more complicated.

We’ve come to view empathy as the core of morality, and that makes us vulnerable. René Girard writes of the “totalitarian command” and “permanent inquisition” that have grown out of post-Christian culture’s concern for victims.

In a recent video, Jonathan Pageau holds up Judas Iscariot as the archetypal embodiment of what he calls “weaponized compassion.” Judas objected that the expensive perfume poured out on Christ’s feet should have been sold and the money given to the poor.

“Ultimately,” Pageau says, “Judas doesn’t care for the poor… He wants her to sell this for money so that he can dip into the money bag and take the money for himself… You can understand that in many ways [one of] which is that many people can use compassion or use compassion as a weapon in order to acquire power for themselves.”

We’ve seen that happen with the mask mandates, the trans issue, and Black Lives Matter. But it still strikes me as dangerous, both to members of the groups for whom we’re told to have compassion and to our own souls, to simply purge ourselves of human kindness.

It may be that “the cruelty is the point” in the sense that anyone seeking to defy the woke technocracy must steel himself against manipulative empathy hacking.

The cops might put MLK decals on their cars. That doesn’t mean you’re a racist for not listening to them. But at the same time, the solution is not to become an actual racist, as many in the cesspits of the internet have done. Don’t shape your morality in opposition to that of the manipulators.

Know what is right, never stop examining your conscience, and then stand firm, even if that means turning a deaf ear to the cries of those who would twist your empathy in the service of tyranny and falsehood.